As someone in AI, which concept blew your mind when you first learnt about it?

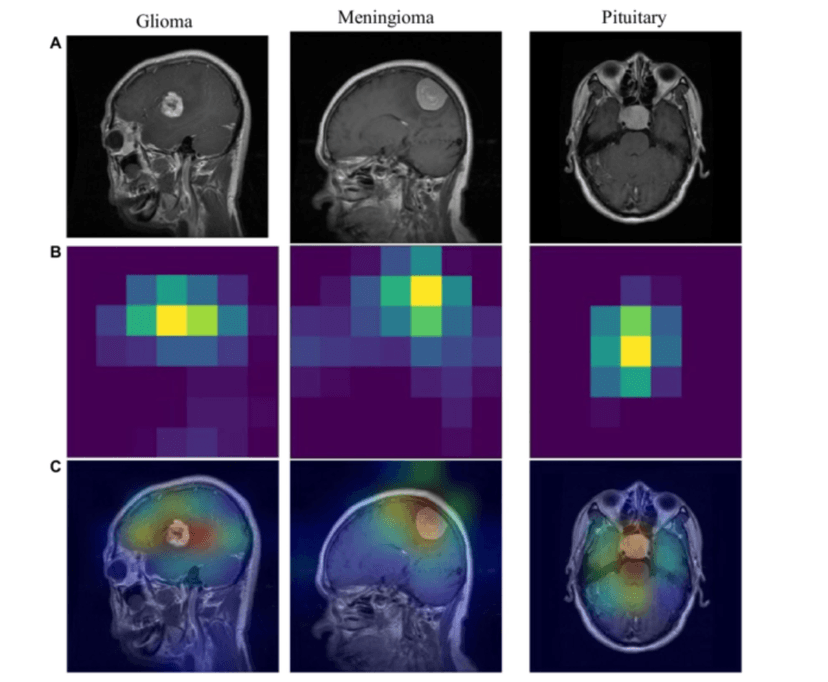

For me? It was Grad-CAM. It was a game changer for selling computer vision initiatives internally to non-technical stakeholders.

The gradCAM function computes the importance map by taking the derivative of the reduction layer output for a given class with respect to a convolutional feature map. If you have a 5-layer convolutional neural network, you can run it against any of the convolutional layers and check the class activation map for each.
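If it helps to see the idea in code, here is a minimal NumPy sketch of just the map-building step. It assumes you have already pulled out the feature maps of one conv layer and the gradients of the class score with respect to them (getting those is framework-specific and not shown). The function name `grad_cam_map` and the toy arrays are made up for illustration; this is not the API of any particular library.

```python
import numpy as np

def grad_cam_map(activations, gradients):
    """Combine feature maps into a Grad-CAM style heatmap.

    activations: (K, H, W) feature maps from one conv layer (assumed given).
    gradients:   (K, H, W) d(class score) / d(activations) (assumed given).
    """
    activations = np.asarray(activations, dtype=float)
    gradients = np.asarray(gradients, dtype=float)
    # One weight per channel: average the gradients over the spatial dims.
    weights = gradients.mean(axis=(1, 2))                 # shape (K,)
    # Weighted sum of the feature maps over the channel axis.
    cam = np.tensordot(weights, activations, axes=1)      # shape (H, W)
    # Keep only regions that push the class score up.
    cam = np.maximum(cam, 0.0)
    peak = cam.max()
    # Scale to [0, 1] so it can be drawn as a heatmap.
    return cam / peak if peak > 0 else cam

# Toy input: two 2x2 feature maps with hand-picked gradients.
acts = np.array([[[1.0, 0.0], [0.0, 0.0]],
                 [[0.0, 0.0], [0.0, 2.0]]])
grads = np.array([np.full((2, 2), 0.5), np.full((2, 2), -1.0)])
heatmap = grad_cam_map(acts, grads)
```

In the toy input, the second channel has a negative gradient, so the spot it fires on is zeroed out by the ReLU and only the top-left cell stays "red". For a real image you would upsample `heatmap` to the image size before overlaying it.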

The best explanation to give is: "Regions in Red are the areas of the image that the neural network is looking at to make a decision"

Well, to be honest, regions in red represent the class activations arising from that region, but that would be too much for normie business guys.

Not AI but Principal Component Analysis blew my mind. Simple concept in matrices and so many uses in general DS, Statistics and AI

@tyrell PCA is genuinely such an amazing thing tbh. It changes the way you look at high-dimensional data and the way you deal with the curse of dimensionality.
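For anyone who wants to see how little matrix work PCA actually needs, here is a rough NumPy sketch that does it through an SVD of the centered data. The helper name `pca` and the synthetic 3-D dataset are assumptions for illustration only, not something from this thread.

```python
import numpy as np

def pca(X, n_components):
    """PCA via SVD: returns projected data, components, explained variance."""
    X = np.asarray(X, dtype=float)
    # Center each feature so the SVD captures variance, not the mean.
    Xc = X - X.mean(axis=0)
    # Rows of Vt are the principal directions, ordered by singular value.
    U, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    components = Vt[:n_components]
    # Sample variance captured along each kept direction.
    explained_variance = (S[:n_components] ** 2) / (X.shape[0] - 1)
    return Xc @ components.T, components, explained_variance

# Synthetic 3-D data that mostly lives along the direction (1, 2, 0).
rng = np.random.default_rng(0)
t = rng.normal(size=200)
X = np.column_stack([
    t,
    2 * t + 0.01 * rng.normal(size=200),
    rng.normal(scale=0.01, size=200),
])
Z, comps, var = pca(X, n_components=1)
```

Here `Z` is the 200 points squeezed down to a single coordinate, and `comps[0]` should come out close to the direction (1, 2, 0) up to sign, since that is where almost all the variance was put.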

diffusion models, how simple they are at core

For me it was something similar too. Just doing the cats vs dogs and the handwritten digit classifier CNN project blew my mind in 2nd year. I trained it on my CPU and I had no clue about anything. But it piqued my interest enough that I still read up so much about advancements in AI.

@TripsofAce That was so magical

Woah, what a coincidence. I was just now checking out a GitHub repo on CAM. github.com/frgfm/torch-cam

@ScrawnyTin8 Now go and sell this hard to your stakeholders. 😂

Transfer learning. I remember I wasn't able to wrap my brain around it for a while (I was mainly working on SVMs, trees and related stuff at that time), and it was tough to visualise the idea that params trained on one task can "increase" the accuracy on another, totally different task (given that the inputs come from a more or less similar distribution). And sometimes with less data, too!

During my BTech, I studied Singular Value Decomposition, which was single-handedly such an exciting thing to learn about. Here is the playlist: https://www.youtube.com/watch?v=gXbThCXjZFM&list=PLMrJAkhIeNNSVjnsviglFoY2nXildDCcv

I am genuinely curious to learn more about all of this. Will definitely try to dig in over the weekend.

For me, it's a lot of things. But one of the first was BERT models, when I was working on them back in 2020. Recently, I heard there is a multilingual version of BERT, mBERT. Waiting to work on it. More recently, the concept of RAG, and the NeuralSeek integration in IBM Watson Assistant. It's game-changing for the chatbot scene.

@HonestGel6 RAGs are the new chatbots now.

When I used gradcam for the first time on yolov8

Gradcam is insane man.